Hello everyone,

Thank you for sharing your concerns regarding the unrestricted AI use rule change. The main goal of publishing these rule changes before releasing the final rules is to gather the community's opinion and to have a discussion among competitors, mentors, region representatives, volunteers and committee members, so we can shape the future of the competition in the best way possible. Please continue sharing your thoughts, whether you like a rule change or disagree with it.

I will start by addressing this generally and then cover the specific points of concern in this thread. The RCJ Rescue Committee saw the need to change the rules on AI use after these findings over the last two years:

- It is really hard to distinguish code written by a competitor from code generated by AI that the competitor then learned to explain. This makes the rule very difficult to enforce and opens a way to game the system.

- It is hard to draw a line between what is allowed and what is not. What is the difference between an AI-based sensor and a regular sensor with a public library that already does a lot of the processing, or a development platform that does a lot of the processing (for example, the Lego color sensor, which already tells you the color)?

- There are websites, services and people you can pay to train an AI model for you, providing only the training photos. These provide the source code of the model. Anyone with a basic AI understanding will be able to explain the code without fully understanding how it is implemented. It is really hard to determine objectively whether a student implemented a model from scratch or took a base model and tweaked its parameters to understand its use.

- We need more experts to understand the teams’ solutions and evaluate the source code of each implementation. This has become a “find the cheater” approach instead of encouraging collaboration and innovation. We believe that if we need tournament organizers to constantly understand every team’s code to make sure they are not cheating or breaking a rule, we are doing it wrong, and it will be unfair depending on who is evaluating your work.

- AI tools are gaining popularity in the world. There is a big push in today’s society to embrace AI and learn how to leverage it to learn more effectively and innovate faster. The industry and graduate programs are looking for ways to implement AI technology in their development. For example, unless your goal is to create a faster/better motion detection program, if your application needs to use motion detection you could use a paid/open-source solution and be able to focus on the rest of the project.

With that being said, we want to keep the RoboCupJunior goals in mind. We want to provide a learning experience in which teams are challenged, can build on their knowledge year over year, and innovate so they can transition to bigger challenges over time (for example, only allowing them to participate in RCJ Rescue Line twice or encouraging students to transition to RoboCup). By allowing unrestricted use of AI, we want to achieve the following:

- Encourage teams to openly give credit to the resources they use. Since using these resources is not cheating, we want teams to embrace collaboration and be open about their approach to the challenge. If something works for one team, let’s make it work for everyone!

- Increase the competition level. We want to lower the entry barrier, so that teams can score more in the competition and learn from the best teams in the process.

- We want to develop a challenging competition where pre-existing solutions won’t be enough to solve the challenge, which encourages teams to innovate. In the past, we were able to make the competition challenging enough to encourage teams to transition from platforms like Lego to platforms like Arduino and Raspberry Pi, not because the challenges can’t be solved with a Lego platform, but because moving to a more sophisticated platform allowed the teams to solve the problem more effectively or faster. Our goal with AI is similar: we want to allow pre-existing solutions like AI-based sensors, but create big challenges that teams who move on to developing their own models will be able to solve in a better way.

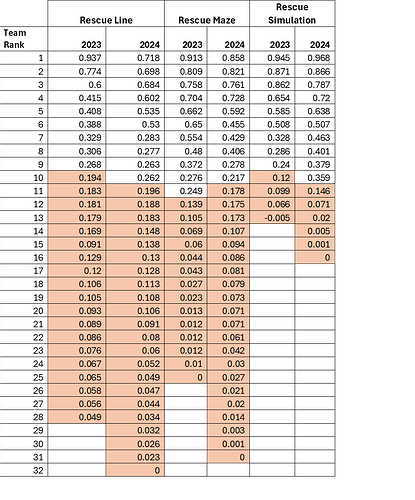

You might be thinking: if this is the overall goal, why are we not seeing a lot of rule changes to increase the competition difficulty? The data from the last 2 years shows that the competition is already challenging enough: very few teams scored a considerable number of points or completed the difficult hazards, and just a few achieved “perfect scores”. With the normalized scores, we can see that the vast majority of teams are scoring less than 0.2 out of 1, with the exception of RCJ Rescue Simulation, where most teams have been able to successfully navigate areas 3 and 4.

Therefore, with this year’s changes, we want to see what teams develop and how they adapt to this rule change. We want to increase the competition level and see more teams scoring, and we want to give field designers more flexibility so they can create difficult challenges based on the teams attending their competitions without making the fields more expensive or difficult to build.

With this context, I hope you can better understand the reasoning behind our decision, which followed many hours of discussion among the committee members and execs, all looking to offer a better RCJ Rescue competition. Please continue sharing your opinions in the comments below and, even better, suggest alternatives for overcoming the challenges described here. If we can find the best solution as a community, it will be very rewarding.

Regards,

Diego Garza Rodriguez on behalf of the 2025 RCJ Rescue Committee